Python

1. Introduction to Python
2. Data Types
3. Statements
4. Control structures: if/else, loops, comprehensions
5. Functions and scope
6. Error handling (try/except)
7. Modules and packages
8. Virtual environments (venv, pipenv)
9. Working with Data
10. File handling: read/write to .txt, .csv, .json, .xml
11. CSV and JSON parsing
12. Working with Excel files (openpyxl, pandas)
13. Regular expressions (re module)
14. Python Libraries for Data Engineering
15. Pandas: data manipulation and transformation
16. NumPy: numerical computing
17. Databases and SQL with Python
18. CRUD operations with SQL
19. Query automation and batch execution
20. Connecting to databases:

SQL

21. SQL Basics
22. Data types
23. CRUD Operations
24. Joins and Set Operations
25. INNER JOIN
26. LEFT JOIN, RIGHT JOIN
27. FULL OUTER JOIN
28. Set operations
29. UNION vs UNION ALL
30. INTERSECT
31. EXCEPT / MINUS
32. Aggregation and Grouping
33. Aggregate functions
34. GROUP BY
35. HAVING
36. Nested subqueries
37. Common Table Expressions (WITH)
38. Window Functions
39. ROW_NUMBER(), RANK(), DENSE_RANK()
40. LEAD() / LAG()
41. PARTITION BY
42. ORDER BY
43. Aggregates over windows
44. Data Modeling and DDL (Data Definition Language)
45. Creating databases and tables
46. CREATE, ALTER, DROP, TRUNCATE
47. Data types, constraints
48. PRIMARY KEY
49. FOREIGN KEY
50. Indexes and Performance Tuning

Data Warehouse

51. Data Warehousing and Analytics SQL
52. Star and snowflake schemas
53. Slowly Changing Dimensions (SCD Types 1, 2)
54. OLAP vs OLTP query patterns
55. Time series and cohort analysis in SQL
56. Working with Modern SQL Engines
57. MySQL
58. Microsoft SQL Server
59. BigQuery / Snowflake / Redshift / Databricks
60. Connecting from Python (psycopg2, sqlalchemy, pandas.read_sql)
61. Optional Advanced Topics

Big Data

62. Introduction to Big Data
63. Distributed System Architecture
64. Configuration files
65. Cluster Architecture
66. Federation and High Availability
67. Production Cluster Discussions
68. YARN MR Application Execution Flow
69. Anatomy of a MapReduce Program
70. Demo on MapReduce
71. Input Splits, Relation between Input Splits and HDFS Blocks
72. MapReduce Algorithm
73. Input Splits in MapReduce, Combiner & Partitioner

HIVE

74. Hive Introduction
75. Hive Introduction
76. Hive Architecture and Components
77. Metastore in Hive, Limitations of Hive
78. Comparison with Traditional Database
79. Hive Data Types and Data Models
80. Hive Tables
81. Managed Tables and External Tables
82. Importing Data
83. Querying Data
84. Partitions and Buckets
85. Hive Script
86. Hive UDF
87. Retail use case in Hive, Hive Demo on Stock Data set

SQOOP

PySpark

92. Spark architecture
93. Spark functional operations such as map, mapPartitions, reduce, etc.
94. What are Dependencies and Why They are Important
95. First Program In Spark
96. RDDs (Operations, Transformations, Actions)
97. Fault Tolerance
98. RDD Actions, Data Loading
99. MapReduce: key-value pairs
100. Loading Data in Memory
101. Memory Layout In Executor
102. Resource Management - Standalone
103. Resource Management - Standalone
104. Resource Management - YARN
105. Dynamic Resource Allocation
106. Project: Stock Data
107. What's the Problem with RDDs
108. Caching Data In Spark
109. DataFrame vs Dataset vs SQL
110. Simple Selects
111. Filtering DataFrames
112. Shuffles and Aggregations on DataFrames
113. Joining DataFrames
114. Learning about Spark SQL
115. Creating DataFrames, manual inferring of schema
116. Working with CSV files, reading JDBC tables, writing DataFrames to JDBC
117. SQLContext in Spark for structured data processing
118. JSON support in Spark SQL
119. Types of Joins and optimisations
120. Caching and Persistence
121. Querying and transforming data in DataFrames
122. Broadcast variables and accumulators
123. Spark resource allocation and configuration
124. Working with XML data, Parquet files
125. Creating HiveContext
126. Writing DataFrames to Hive
127. Shared variables and accumulators
128. Spark Streaming Architecture
129. Writing a streaming program, processing a Spark stream
130. Request count and DStream
131. Multi-batch operations, sliding window operations, and advanced data sources
132. Different algorithms, the concept of iterative algorithms in Spark

Databricks

133. Creating a Cluster in Databricks
134. How does Apache Spark run on a cluster?
135. Creating notebooks and a few exercises in PySpark
136. How to create, write, and execute various scripts
137. Creating modules and invoking them from other notebooks
138. Creating Widgets
139. File options
140. Magic commands
141. Databricks-specific commands using the dbutils library
142. Performance Tuning
143. DAG Discussion
144. Narrow Dependency
145. Wide Dependency
146. Failover
147. Cache & persist
148. DataFrames
149. Creating DataFrames, manual inferring of schema
150. SQLContext in Spark for structured data processing
151. JSON support in Spark SQL
152. Working with Parquet files
153. Reading CSV files, user-defined functions in Spark SQL
154. Shared variables and accumulators
155. Learning to query and transform data in DataFrames
156. Creating and executing a Workflow in Databricks
157. Monitoring the Workflow
158. Debugging issues using logs in Databricks
159. How to create a cluster in Databricks, with dos and don'ts and the cost of running a cluster
160. Create cluster / autoscale option / nodes and executors
161. Job clusters and all-purpose clusters
162. Connection pool setup
163. Verifying driver logs to understand execution of a workflow/notebook (DAG)
164. Shuffles and Aggregations on DataFrames
165. Joining DataFrames
166. Creating DataFrames, manual inferring of schema
167. Distributed Data: The DataFrame
168. SQLContext in Spark for structured data processing
169. JSON support in Spark SQL
170. Types of Joins and optimisations
171. Caching and Persistence
172. Apache Spark Architecture: DataFrame Immutability
173. Apache Spark Architecture: Narrow Transformations
174. How to drop rows and columns; working with XML data, Parquet files
175. Shared variables and accumulators
176. Spark resource allocation and configuration
177. Spark performance tuning
178. Optimizing Spark jobs, understanding the execution plan
179. Deciding executors and executor cores
180. Catalyst optimiser
181. Spark Tungsten basics
182. Handling Null Values Part II - DataFrameNaFunctions
183. Sort and Order Rows - Sort & OrderBy
184. Create Group of Rows: GroupBy
185. Delta architecture / lakehouse / Medallion architecture
186. Fundamentals of Machine Learning with Spark
187. Spark Machine Learning components
188. Stages in the development of a Machine Learning model
189. Machine Learning Model Definition and Pipeline Development
190. Model evaluation with PySpark and Databricks
191. Spark Optimization Techniques
192. Job Stats Discussions

Generative AI

196. Introduction to using Generative AI for Data Generation and Augmentation
197. Generating Synthetic Data with Generative AI
198. Augmenting Existing Data with Generative AI
199. Creating Time Series Data
200. Generating Edge Cases in Data Engineering
201. Handling PII Data with Generative AI
202. Balancing Imbalanced Datasets in Data Engineering
203. Data Augmentation App Walkthrough
204. Creating Functions for Data Engineering
205. Running the Web App (GenAI for Data Engineering)
206. Introduction to using Generative AI for Writing Data Engineering Code
207. Data Cleaning and Modeling with Generative AI
208. Documenting Code for Data Projects
209. Creating Data Schemas, Systems, and Pipelines
210. Transferring Data with Generative AI
211. Introduction to Generative AI Tools for Data Engineering
212. Use ChatGPT for Data Engineering
213. Build a Data Engineering App with Claude
214. Custom GPTs for Data Engineering
215. Custom LLM or Generative AI tools for Data Engineering
216. Copilot for Azure Data Factory and Gemini for BigQuery
217. Introduction to using Generative AI for Data Parsing and Extraction
218. Parsing Data (Data Engineering)
219. Extracting Data from Web Scrapes and Images
220. Performing Named Entity Recognition
221. Activity: Extracting Data from Contracts
222. Introduction to using Generative AI for Data Querying and Analysis
223. Querying Data with Generative AI
224. Optimizing Data Queries
225. Activity: Developing Data Engineering Query Apps
226. Running Data Engineering Query Apps
227. Converting to a Web App with Front-End
228. Generative AI for Data Enrichment
229. Enriching Features for Data Models
230. Data Imputation and Normalization with Generative AI
231. Imputation for Time Series Data Engineering
232. Standardizing and Normalizing Textual Data with Generative AI

Who Can Apply for the Data Engineering Course?

• Freshers and Undergraduates willing to pursue a career in data engineering
• Anyone looking for a career transition to data engineering
• IT professionals
• Experienced professionals willing to learn data engineering
• Technical and non-technical professionals with basic-level programming knowledge can also apply
• Project Managers

What Roles Does a Data Engineer Play?

Big Data Engineer

They design and build complex data pipelines and have expert knowledge of coding in languages such as Python. These professionals work closely with data scientists to run code using tools such as the Hadoop ecosystem.

Data Architect

They are typically the database administrators and are responsible for data management. These professionals have in-depth knowledge of databases, and they also help in business operations.

Business Intelligence Engineer

They are skilled in data warehousing and create dimension models for loading data for large-scale enterprise reporting solutions. These professionals are experts in using ELT tools and SQL.

Data Warehouse Engineer

They are responsible for looking after the ETL processes, performance administration, dimensional design, etc. These professionals take care of the full back-end development and dimensional design of the table structure.

Technical Architect

They design and define the overall structure of a system to improve the business of an organization. The job role of these professionals involves breaking large projects into manageable pieces.

•What does a Data Engineer do?

With organizations relying heavily on data to drive growth today, data engineering is becoming a more popular skill. Data engineers are tasked with designing a system to unify multiple sources of business data in a meaningful and accessible way. The typical role of data engineers includes:

• Acquiring big data sets from different data warehouses
• Cleaning those big data sets and finding any errors
• Removing any form of duplications that may occur
• Converting the cleaned data into a readable format
• Interpreting data to provide reliable information for better decisions

•What are the benefits of taking this Data Engineering course?

This comprehensive data engineering course is designed to introduce you to data, give you a detailed view of the domain, and equip you with the skills and techniques to succeed in it. Our course integrates data warehousing, data lakes, and data engineering pipelines to create a comprehensive and scalable data architecture.

•What is the average salary of a Data Engineer?

Today, small and large companies depend on data to help answer important business questions. Data engineering plays a crucial role in supporting this process by making it possible for others to inspect the available data reliably. This makes data engineers important assets to organizations, and they earn lucrative salaries worldwide. Here are some average yearly estimates:

• India: INR 10.5 Lakhs
• US: USD 131,713
• Canada: CAD 98,699
• UK: GBP 52,142
• Australia: AUD 118,000

•What will be the career path after completing the Data Engineering Course?

Designing and building data applications is very well regarded in the industry. While becoming a data engineer would be the most obvious route after completing this course, there are several other career paths you could choose:

• Big Data Engineer: Work with big data technologies like Hadoop and Kafka to manage data processing tasks.
• Data Architect: Design and manage an organization's data architecture, ensuring integrity and security.
• Data Analyst: Effectively analyze and interpret complicated data sets to make informed decisions.
• Business Intelligence Developer: Create and manage BI solutions using dashboards and reports.

•Do Data Engineers require prior coding experience?

Yes, data engineers are expected to have basic programming skills in Java, Python, R or any other language.

•Can I apply for this data engineering course with no technical background?

Yes, you can join this data engineering course even with no technical background. However, it's recommended that you have a basic understanding of object-oriented programming languages and at least two years of relevant work experience.

•Which are the top industries suitable for Data Engineering professionals?

Organizations around the world are looking for ways to leverage data to enhance services, making data engineers a sought-after asset. That being said, some of the more popular industries for data engineers include:

• Medicine and healthcare
• Banking
• Information technology
• Education
• Retail
• Ecommerce

•What is the refund policy for this Data Engineering Course?

Submit your refund request within 7 days of purchasing your course and 100% of your money will be refunded (no questions asked).

After 7 days, no refunds are allowed under any circumstances.

•Are there any other online courses Times Analytics offers under Data Science?

Absolutely! Times Analytics offers plenty of options to help you upskill in Data Science. You can take advanced certification training courses or niche courses to sharpen specific skills. Whether you want to master new tools or stay ahead with the latest trends, there's something for everyone. These courses are designed to elevate your knowledge and keep you competitive in the Data Science field.

Similar programs that we offer under Data Science:

•Will missing a live class affect my ability to complete the course?

No, missing a live class will not affect your ability to complete the course. With our 'flexi-learn' feature, you can watch the recorded session of any missed class at your convenience. This allows you to stay up-to-date with the course content and meet the necessary requirements to progress and earn your certificate.

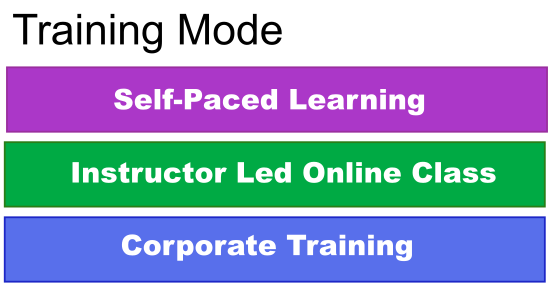

•Does Times Analytics have corporate training solutions?

Yes, Times Analytics for Business offers learning solutions for the latest AI and other digital skills, including industry certifications. For talent development strategy, we work with Fortune 500 and mid-sized companies with short skill-based certification training and role-based learning paths.

Our team of curriculum consultants works with each client to select and deploy the learning solutions that best meet their teams’ requirements.

What can I expect from these courses offered by Times Analytics?

Times Analytics’s online Data Engineering courses will validate your skills in the domain and will add value to your resume. The real-life practical applications will help you develop a strong skill set that you can showcase to recruiters. Get the best Data Engineer course certification and excel in your data engineering career.

How will I get certified?

On completing the online data engineering course, along with the various projects and assignments in this program, you will receive your data engineering certification.

Which are the top companies hiring in this domain?

The top companies hiring data engineers around the globe are as follows:

• Tata Consultancy Services (TCS)
• LTI
• Accenture
• Amazon
• Infosys
• Capgemini

I don't have any technical background. Can I still enroll in this Data engineer certification course?

Yes, you can easily join the data engineering courses even if you do not have technical experience or are not from a technical background. However, knowing any object-oriented programming language will be helpful.

How much time should I devote to completing this program?

The data engineering course includes 2 months of live classes and lifelong access to course material. During this period, it is suggested that you devote six to seven hours a week to master the data engineering concepts taught in the online classes.

Topics You will Master
